Biography

I am currently a master's student at Tsinghua University, studying in the IIG group at Shenzhen International Graduate School, supervised by Prof. Yujiu Yang.

I received my bachelor's degree from the Department of Artificial Intelligence and Automation, Huazhong University of Science and Technology, in 2021.

I expect to graduate in June 2025 and plan to apply to PhD programs in Fall 2025.

Research Interests

My research interests lie in Knowledge Distillation and LLM efficiency. Recently, my work has focused on LLM efficiency.

Publications and Preprints

* indicates equal contribution.

- LoCa: Logit Calibration for Knowledge Distillation
  Runming Yang, Taiqiang Wu, Yujiu Yang.
  ECAI '24 (Accepted) [pdf]

- LLM-Neo: Parameter Efficient Knowledge Distillation for Large Language Models
  Runming Yang*, Taiqiang Wu*, Jiahao Wang, Pengfei Hu, Ngai Wong, Yujiu Yang.
  ICASSP '25 (Under Review) [model] [pdf and code coming soon]

- Rethinking Kullback-Leibler Divergence in Knowledge Distillation for Large Language Models
  Taiqiang Wu, Chaofan Tao, Jiahao Wang, Runming Yang, Zhe Zhao, Ngai Wong.
  COLING '25 (Under Review) [pdf]

Internship

- 2023.11 ~ Present, PCG, Tencent

Projects

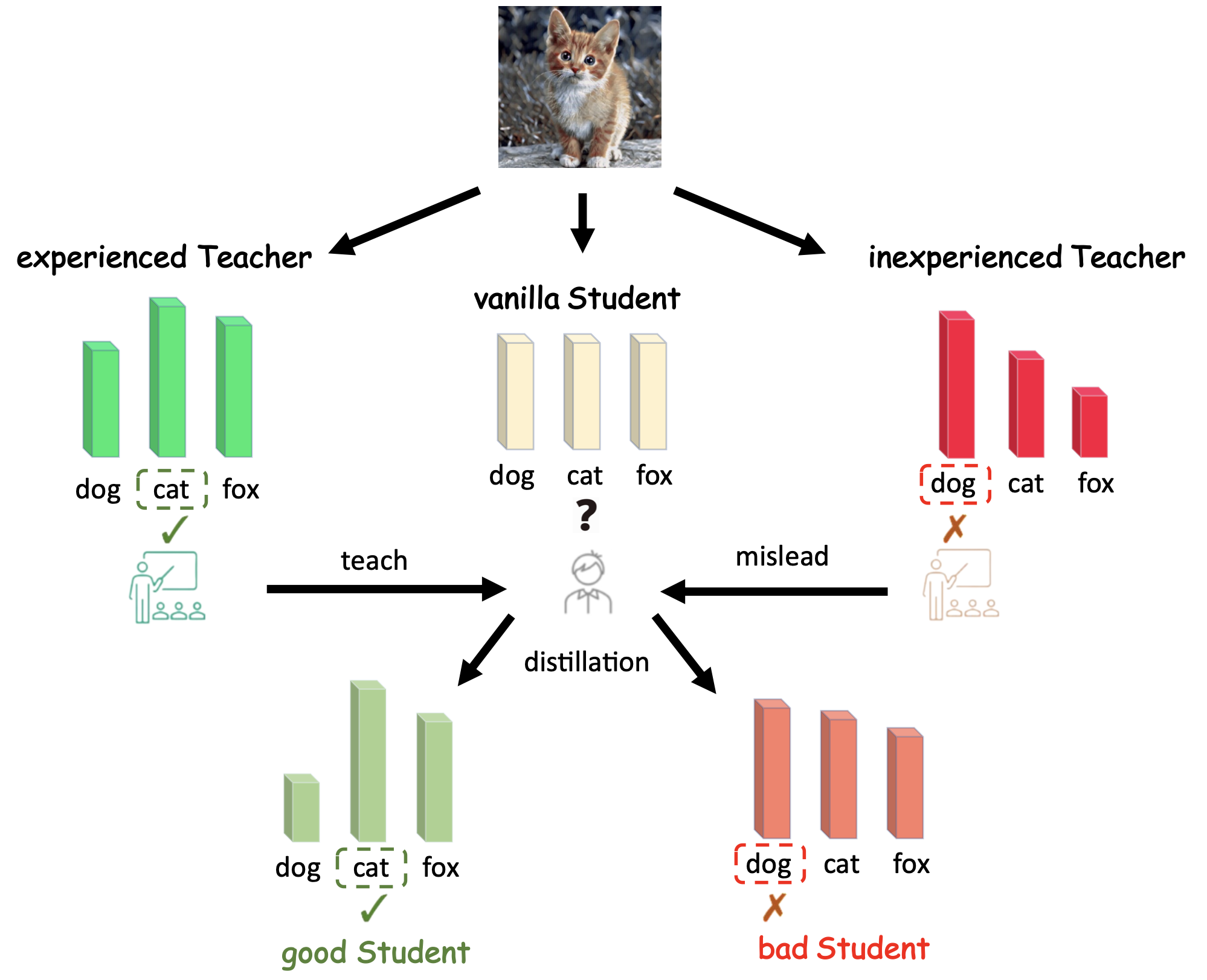

LoCa (ECAI '24)

Identify that the teacher's misclassifications during distillation harm the student's learning.

Propose LoCa, which calibrates the logit distribution of misclassified samples so that the teacher's prediction agrees with the target label.

Conduct experiments on image classification and natural language generation tasks; the method extends easily to LLMs.

Improve accuracy as a plug-in enhancement while adding only 1% computational cost.

arXiv

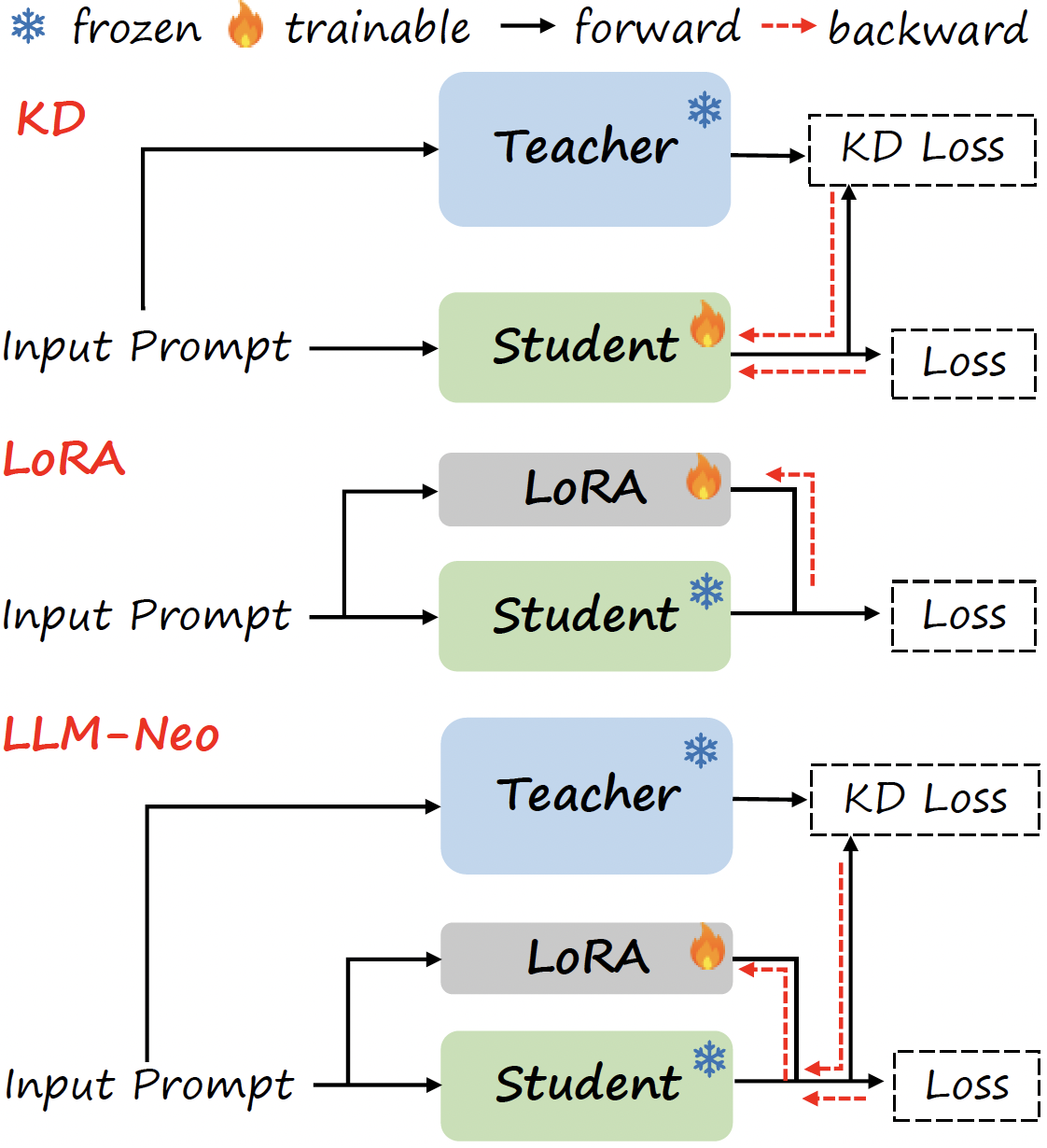

LLM-Neo (submitted to ICASSP '25)

Discover similarities between Knowledge Distillation (KD) and Low-Rank Adaptation (LoRA) as knowledge transfer methods for LLMs.

Combine both ideas into a more efficient knowledge transfer strategy, LLM-Neo.

Demonstrate better performance than SFT, LoRA, and KD on the Llama 3.1 series.

Hugging Face

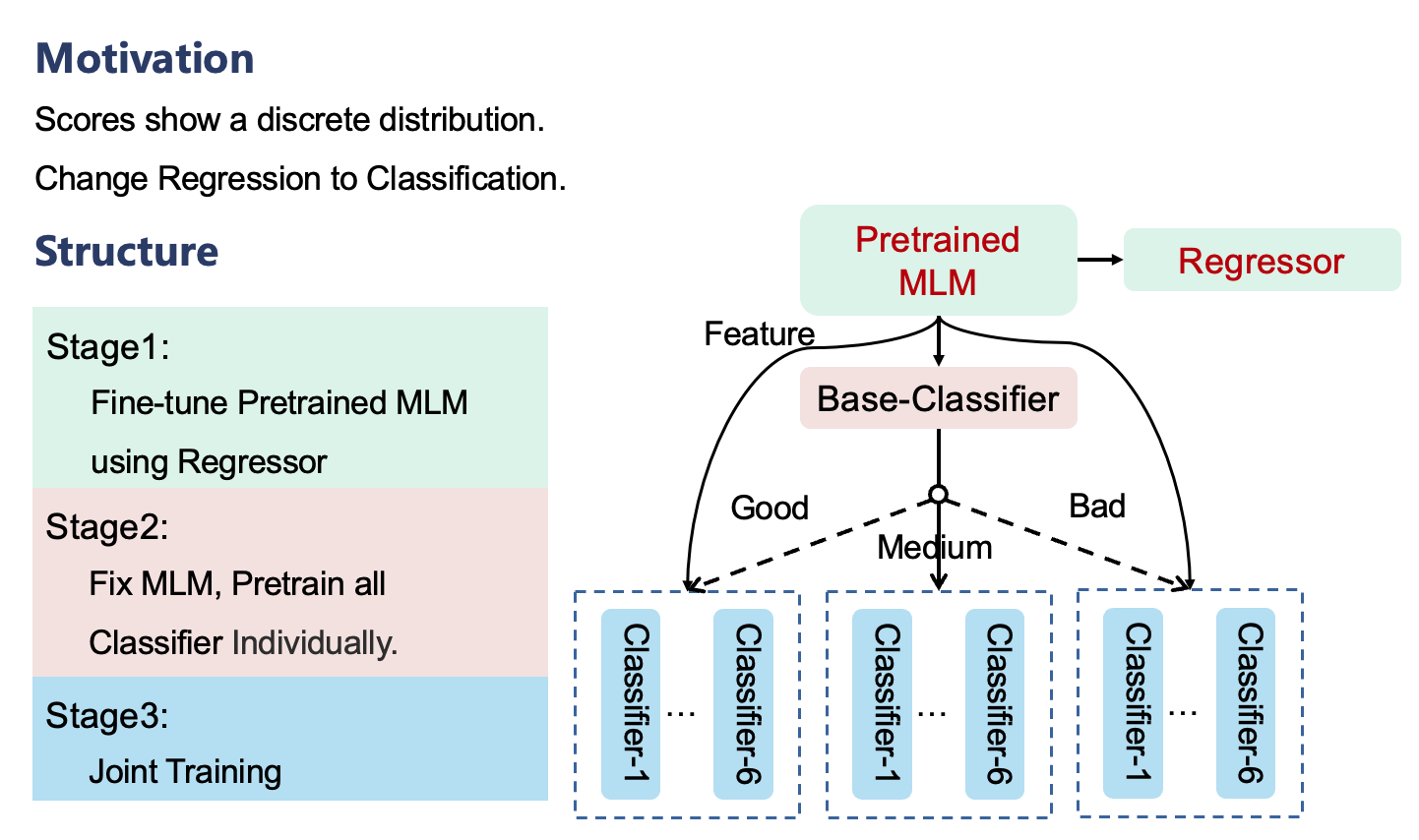

Kaggle (🥈Silver Medal)

Assess the English writing proficiency of junior students, a task with few training samples and long texts.

Apply techniques such as data augmentation, token truncation, and dropout regularization.

Achieve a silver medal with an ensemble of 8 models (e.g., RoBERTa) and 5-fold cross-validation.

Kaggle

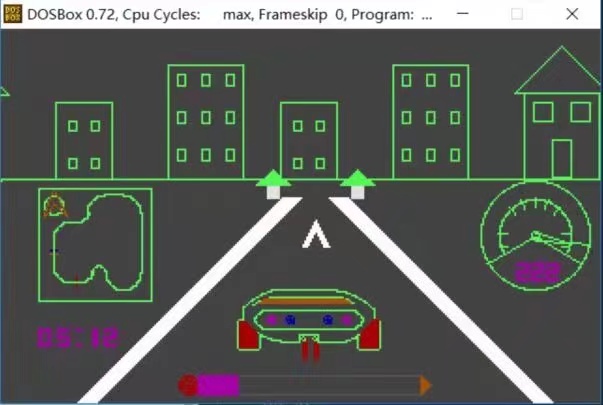

Need for Speed (HUST Undergraduate Course Design)

A clone of the "Need for Speed" game written in pure C.

Pixel-art style with a retro feel.

Implement key game mechanics, including acceleration, turning, drifting, and collision handling.

(Last updated September 2024)

Another thing that excites me is coming across gradient-colored clouds and lone trees. I like to capture them and share them with others!

Nanshan

Days of self-isolation at a hotel

Hebei

Same perspective before and after basketball

Hebei

A street corner with nobody around

HUST

Street with students